FinQA Final Presentation

Q&A system for financial reports and analyst reviews

Xianghui Xin

2023-37252

Julian Felix Kieslinger

2025-82736

Jiayue Wang

2022-21806

Team: 😈🔥

Date:

https://finqa-hallumaker-final.pages.dev

Presentation Outline

- Team Summary

- Overall Strategy & Architecture

- Results Evolution: From Baseline to Final

- Key System Enhancements

- Difficulties Encountered

- Demos

Team Summary

| Member | Role | Key Contributions |

|---|---|---|

| Xianghui Xin | Project Lead | Overall system architecture, Dynamic Question Categorization, Difficulty-based strategy implementation, Agent prompt optimization, Error analysis, Presentation materials. |

| Julian Kieslinger | Evaluation & Domain Insights | Model evaluation framework, Financial tool definition & testing, Question decomposition, Question keyword filtering, Github CI workflow. |

| Jiayue Wang | Data & Tooling | Retrieval improvement (retrieval scope extension, temporal alignment, question rewriting, and document reranking), Math & Fin tool extensions, Inference scaling, Result verifier component, Document embedding strategies. |

Collaboration Strategy

- Weekly team meetings to discuss progress

- Task assignment based on expertise

- Regular code reviews and pair programming

Overall Strategy & Architecture

flowchart LR %% Main flow User([User Query]) --> Client["MCP Client (MultiServerMCPClient)"] %% Client to Router communication Client -->Router["Question Router (Strategy Selector)"] %% Router to Strategy selection Router -->|"Applies strategy based on level"| ReAct["ReAct Agent (LangGraph)"] %% Server section with detailed communication subgraph Servers["MCP Servers (Tool Providers)"] direction LR ReAct -->|"Tool calls"| Math["Math Server"] Math -->|"Calc results"| ReAct ReAct -->|"Tool calls"| Finance["Finance Server"] Finance -->|"Financial metrics"| ReAct ReAct -->|"Search queries"| Chroma["Chroma Server"] Chroma -->|"Relevant chunks"| ReAct ReAct -->|"SQL queries"| SQLite["SQLite Server"] SQLite -->|"Structured data"| ReAct end %% Database connections subgraph Databases["Data Sources"] direction LR Chroma -->|"Vector search"| ChromaDB[(Vector DB)] SQLite -->|"SQL queries"| CompanyDB[(Company DB)] end %% Verification and Final answer flow ReAct -->|"Agent Execution Logs (Multiple Runs)"| Verifier["Result Verifier"] Verifier -->|"Verified & Synthesized Answer"| Answer([Final Answer]) %% Styling classDef userNode fill:#ffebee,stroke:#c62828,color:#c62828,rx:20,ry:20; classDef clientNode fill:#e8eaf6,stroke:#3f51b5,color:#3f51b5,rx:10,ry:10; classDef reactNode fill:#b2dfdb,stroke:#00796b,color:#00796b,rx:10,ry:10; classDef serverNode fill:#f5f5f5,stroke:#424242,color:#424242,rx:5,ry:5; classDef dbNode fill:#ede7f6,stroke:#4527a0,color:#4527a0,rx:0,ry:0; classDef answerNode fill:#e8f5e9,stroke:#2e7d32,color:#2e7d32,rx:20,ry:20; classDef verifierNode fill:#fff9c4,stroke:#f57f17,color:#f57f17,rx:10,ry:10; classDef subgraphStyle fill:#fafafa,stroke:#9e9e9e,stroke-width:1px,rx:10,ry:10,color:#424242; %% Apply styles class User userNode; class Client clientNode; class Router clientNode; class ReAct reactNode; class Math,Finance,Chroma,SQLite serverNode; class ChromaDB,CompanyDB dbNode; class Verifier verifierNode; class Answer answerNode; class Servers,Databases subgraphStyle;

Core Orchestration (mcp_client.py)

The Engine Room: Powering FinQA

1. Multi-Server Initialization

async with MultiServerMCPClient({

"math": {

"command": "python",

"args": ["./servers/math_server.py"], ...

},

"fin": {

"command": "python",

"args": ["./servers/fin_server.py"], ...

},

"chroma": {

"command": "python",

"args": ["./servers/chroma_server.py"], ...

},

"sqlite": {

"command": "python",

"args": ["./servers/sqlite_server.py"], ...

}

}) as client:

# ... main processing loop ...

2. Flexible Question Processing

# Args: --first N, --last N, --all

# if args.first:

# qa_dict = qa_dict[:args.first]

for i, item in enumerate(qa_dict):

question = item['Question']

print(f"Processing Q{i+1}: {question}")

level = await categorize_question_with_llm(

question, categorization_model)

# ... strategy selection ...

3. Multi-Run Strategy & Verification

temp_list = []

for _ in range(3): # Multi-run execution

temp_result = await router.process_question(

question,

client=client,

model=answering_model,

recursion_limit=level_config['recursion_limit']

)

extracted_log = result_verifier.extract_info(

question, temp_result)

temp_list.append(extracted_log)

# Verify and select the best answer

results_list[i] = await result_verifier.verify_results(

question, temp_list)

Impact: Robust orchestration for dynamic strategy application and reliable answer generation through multi-path reasoning.

Results Evolution: From Baseline to Final

Baseline Performance (Initial)

$ python score.py

Overall Accuracy: 0.2600 (13/50)

...Our initial execution resulted an accuracy of 26%.

Initial Error Analysis

- Hallucination - LLM generating incorrect financial data

- Tool Selection - Choosing wrong tools for specific tasks

- Temporal Confusion - Mixing data from different fiscal years

- Complex Calculations - Errors in multi-step financial formulas

Midterm: Impact of Question Categorization

Improved Performance (Post-Categorization)

Overall Accuracy: 0.3400 (17/50)

Question Distribution:

Level 1: 16 questions - Accuracy: 0.6250 (10/16)

Level 2: 8 questions - Accuracy: 0.2500 (2/8)

Level 3: 2 questions - Accuracy: 1.0000 (2/2)

Level 4: 12 questions - Accuracy: 0.2500 (3/12)

Level 5: 12 questions - Accuracy: 0.0000 (0/12)

Level and Correctness Summary:

levels: 12111 11111 42421 42324 23112

21111 55455 55444 55555 44454

answer: oxoox oooxo xooxx xooxo xooox

xxoxx xxxxx xxxox xxxxx xxxxx

Number of Quesiton hitting recursion limit: 26Final: Advanced Prompting & Verification

Current Performance (Final Overview)

Overall Accuracy: 0.6200 (31/50)

Question Distribution:

Level 1: 16 questions - Accuracy: 0.7500 (12/16)

Level 2: 8 questions - Accuracy: 0.7500 (6/8)

Level 3: 2 questions - Accuracy: 1.0000 (2/2)

Level 4: 11 questions - Accuracy: 0.6364 (7/11)

Level 5: 13 questions - Accuracy: 0.3077 (4/13)

Final Results: Detailed Analysis

Level and Correctness Summary

levels: 12111 11111 42221 44324 23111

21121 55555 55444 55555 44454

answer: oooox ooooo oooxx oxooo oooox

xxooo ooxox xoooo xxxxx xoxxx

(o = correct, x = incorrect)Error Analysis

=============================

ERROR ANALYSIS

=============================

Multi Doc Failure 8 (16.0%)

Wrong Calculation 6 (12.0%)

System Error 2 ( 4.0%)

Incomplete Data 2 ( 4.0%)

Format Error 1 ( 2.0%)Error Distribution

Key System Enhancements

Overall Categorization & Strategy Flow

flowchart LR

A[Incoming Question] --> B[LLM Question Analyzer]

B --> C{Difficulty Assessment}

C -->|"Simple fact retrieval

(single data point lookup)"|L1[Level 1]

C -->|"Simple calculations

on single document"|L2[Level 2]

C -->|"Multi-step calculations

or temporal reasoning"|L3[Level 3]

C -->|"Calculations involving

multiple documents/companies"|L4[Level 4]

C -->|"Complex reasoning with

multiple factors/filtering"|L5[Level 5]

L1 --> P1[Simple RAG Strategy]

L2 --> P1

L3 --> P2[Tool-First Strategy]

L4 --> P2

L5 --> P3[Agentic RAG Strategy]

P1 -->|"Direct retrieval +

concise numerical answer"|Result1[Answer]

P2 -->|"Extract metrics →

Temporal alignment →

Retrieve data → Calculate"|Result2[Answer]

P3 -->|"Entity identification →

Data gathering →

Calculation → Comparison"|Result3[Answer]

classDef level1 fill:#e0f7fa,stroke:#0288d1,color:#000;

classDef level2 fill:#e8f5e9,stroke:#2e7d32,color:#000;

classDef level3 fill:#fff3e0,stroke:#fb8c00,color:#000;

classDef level4 fill:#ede7f6,stroke:#5e35b1,color:#000;

classDef level5 fill:#ffebee,stroke:#d32f2f,color:#000;

classDef process fill:#f5f5f5,stroke:#333,stroke-dasharray:4 2,color:#000;

classDef llm fill:#f8bbd0,stroke:#880e4f,color:#000;

classDef defaultNode fill:#eee,stroke:#333,color:#000;

class L1 level1;

class L2 level2;

class L3 level3;

class L4 level4;

class L5 level5;

class P1,P2,P3 process;

class B,C llm;

class A,Result1,Result2,Result3 defaultNode;

flowchart LR

A[Incoming Question] --> B[LLM Question Analyzer]

B --> C{Difficulty Assessment}

C -->|"Simple fact retrieval

(single data point lookup)"|L1[Level 1]

C -->|"Simple calculations

on single document"|L2[Level 2]

C -->|"Multi-step calculations

or temporal reasoning"|L3[Level 3]

C -->|"Calculations involving

multiple documents/companies"|L4[Level 4]

C -->|"Complex reasoning with

multiple factors/filtering"|L5[Level 5]

L1 --> P1[Simple RAG Strategy]

L2 --> P1

L3 --> P2[Tool-First Strategy]

L4 --> P2

L5 --> P3[Agentic RAG Strategy]

P1 -->|"Direct retrieval +

concise numerical answer"|Result1[Answer]

P2 -->|"Extract metrics →

Temporal alignment →

Retrieve data → Calculate"|Result2[Answer]

P3 -->|"Entity identification →

Data gathering →

Calculation → Comparison"|Result3[Answer]

classDef level1 fill:#e0f7fa,stroke:#0288d1,color:#000;

classDef level2 fill:#e8f5e9,stroke:#2e7d32,color:#000;

classDef level3 fill:#fff3e0,stroke:#fb8c00,color:#000;

classDef level4 fill:#ede7f6,stroke:#5e35b1,color:#000;

classDef level5 fill:#ffebee,stroke:#d32f2f,color:#000;

classDef process fill:#f5f5f5,stroke:#333,stroke-dasharray:4 2,color:#000;

classDef llm fill:#f8bbd0,stroke:#880e4f,color:#000;

classDef defaultNode fill:#eee,stroke:#333,color:#000;

class L1 level1;

class L2 level2;

class L3 level3;

class L4 level4;

class L5 level5;

class P1,P2,P3 process;

class B,C llm;

class A,Result1,Result2,Result3 defaultNode;

1. Question Understanding & Strategic Routing

A. LLM-Powered Categorization

What: Uses gpt-4o-mini to classify questions into 5 difficulty levels based on a detailed prompt.

Prompt Snippet:

"You are an expert in financial analysis...

Categorize into: LEVEL_1 (fact retrieval), LEVEL_2

(simple calc),... LEVEL_5 (complex reasoning)

Respond with ONLY a single number (1-5)"Impact on Agent:

- Enables selection of the most resource-efficient and effective strategy.

- Avoids over-complication for simple queries and under-powered approaches for complex ones.

- Sets the stage for tailored agent behavior in subsequent steps.

B. Level-Specific Strategy Prompts (question_router.py)

Based on the category, a ReAct agent is invoked with a highly tailored prompt to guide its reasoning, tool use, and efficiency.

- Goal: Fast, direct retrieval.

- Prompt: "MAXIMUM 25 STEPS... Provide ONLY exact numerical answer..."

- Agent Behavior: Minimal steps, immediate answering.

- Goal: Structured calculation.

- Prompt: "OPTIMIZED ANALYTICAL PROCESS: EXTRACT, SEARCH, CALCULATE..."

- Agent Behavior: Methodical data extraction & tool use.

- Goal: Complex, multi-data reasoning.

- Prompt: "STREAMLINED APPROACH: IDENTIFY, SEARCH (ALL data upfront)... NO VERIFICATION"

- Agent Behavior: Broader data gathering before calculation.

2. Targeted Optimizations & Verification

A. Retrieval improvement

Query Rewriting:

- Retrieval scope extended (+ 5 years).

- Lookup Table-Based Fiscal Year-End Extraction.

- LLM-based normalization of relative time (e.g., "prior year" to "202X").

- LLM-based question rewriting into a formal financial language format.

- LLM-basedGeneration of multiple search queries related to the initial query.

- Impact: Reduced temporal confusion, more accurate data retrieval for time-sensitive queries.

Document reranking:

- Reciprocal rank fusion algorithm

$$\text{Score}(d) = \sum_{i=1}^{N} \frac{1}{k + \text{rank}_i(d)}$$

- A document may appear in multiple lists, and the final fused score is the sum of the reciprocal ranks from all the lists where it appears.

- $\text{rank}_i(d)$ is the rank of focument d in the ith ranked list;

- k is a constant (e.g., 60);

- N is the number of ranked lists.

B. Tool Extension

- calculate_eps

- calculate_operating_profit_margin

- calculate_current_ratio

- calculate_cashflowfromoperations

- calculate_shares_outstanding

- calculate_price_to_earnings_ratio

- calculate_market_capitalization

- calculate_ratio_of_metrics

- add

- multiply

- divide

- difference

- average

- sum_values

- convert_thousands_to_number

- convert_millions_to_number

- convert_billions_to_number

- calculate_percentage

- calculate_percentage_change

- average_list

C. Result Verification

- Large language monkeys: scaling inference compute with repeated sampling (3 times).

- A verifier LLM compares these answers and generate the final results.

Verifier Prompt Snippet:

"You are an expert verifier...

Compare multiple answers... Identify inconsistencies...

Select most coherent reasoning path ...

Or synthesize the best aspects of them into a final...

Final Verified Answer: ..."Impact:

- Crucial quality control.

- Mitigates single-run errors.

- Boosts reliability by cross-checking reasoning paths.

Result Verifier Workflow

flowchart TD subgraph Agent Run 1 A1["Question"] --> P1["Agent Processing 1 (Tools, Reasoning)"] --> O1["Output Log 1 (Answer, Tool Calls, Responses)"] end subgraph Agent Run 2 A2["Question"] --> P2["Agent Processing 2 (Tools, Reasoning)"] --> O2["Output Log 2 (Answer, Tool Calls, Responses)"] end subgraph Agent Run 3 A3["Question"] --> P3["Agent Processing 3 (Tools, Reasoning)"] --> O3["Output Log 3 (Answer, Tool Calls, Responses)"] end O1 --> VerifierLLM["Verifier LLM (gpt-4.1-mini)"] O2 --> VerifierLLM O3 --> VerifierLLM VerifierLLM -->|"Comparison & Synthesis"| FinalAnswer["Final Verified Answer"] classDef agentRun fill:#e3f2fd,stroke:#1e88e5,color:#000; classDef verifier fill:#fff9c4,stroke:#f57f17,color:#000; classDef io fill:#fce4ec,stroke:#ad1457,color:#000; classDef answerNode fill:#e8f5e9,stroke:#2e7d32,color:#000; class P1,P2,P3 agentRun; class O1,O2,O3 io; class VerifierLLM verifier; class FinalAnswer answerNode;

3. Engineering for Iteration & Quality

A. Per-Question Correctness Tracking

score.py generates a concise visual summary showing the assigned difficulty level and correctness (o/x) for every question in the batch.

Level and Correctness Summary (Output from score.py):

levels: 12121 11111 42421 42324 24222

22121 54445 55444 55555 44454

answer: oooox ooooo oooox oooxo oooox

xxooo xxxoo xxoxo xxxxx oxxxx

(o = correct, x = incorrect)

Impact: Provides an at-a-glance overview of performance across different question types and individual questions, crucial for comparing experiment runs and quickly spotting error patterns or regressions.

B. LLM-Enhanced Error Classification

When simple pattern matching is insufficient, score.py leverages an LLM (gpt-4o-mini via error_classification_chain) for nuanced error analysis based on the question, correct answer, and LLM response.

# In score.py, within classify_error_with_ground_truth:

# ... (initial pattern-based checks) ...

# Fallback to LLM for complex cases:

llm_classification = error_classification_chain.invoke({

'question': question_text,

'correct_answer': actual_answer,

'llm_response': model_output,

'level': question_level

})

# Expected output from LLM (ErrorType Pydantic model):

# {

# "error_category": "wrong_retrieval",

# "error_subcategory": "selected_wrong_document_section",

# "confidence": 0.85,

# "reasoning": "The LLM retrieved data for a similar but incorrect metric."

# }

Impact: Provides deeper insights into failure modes beyond surface-level symptoms, guiding more targeted prompt and logic refinements. The structured JSON output aids in aggregating error statistics.

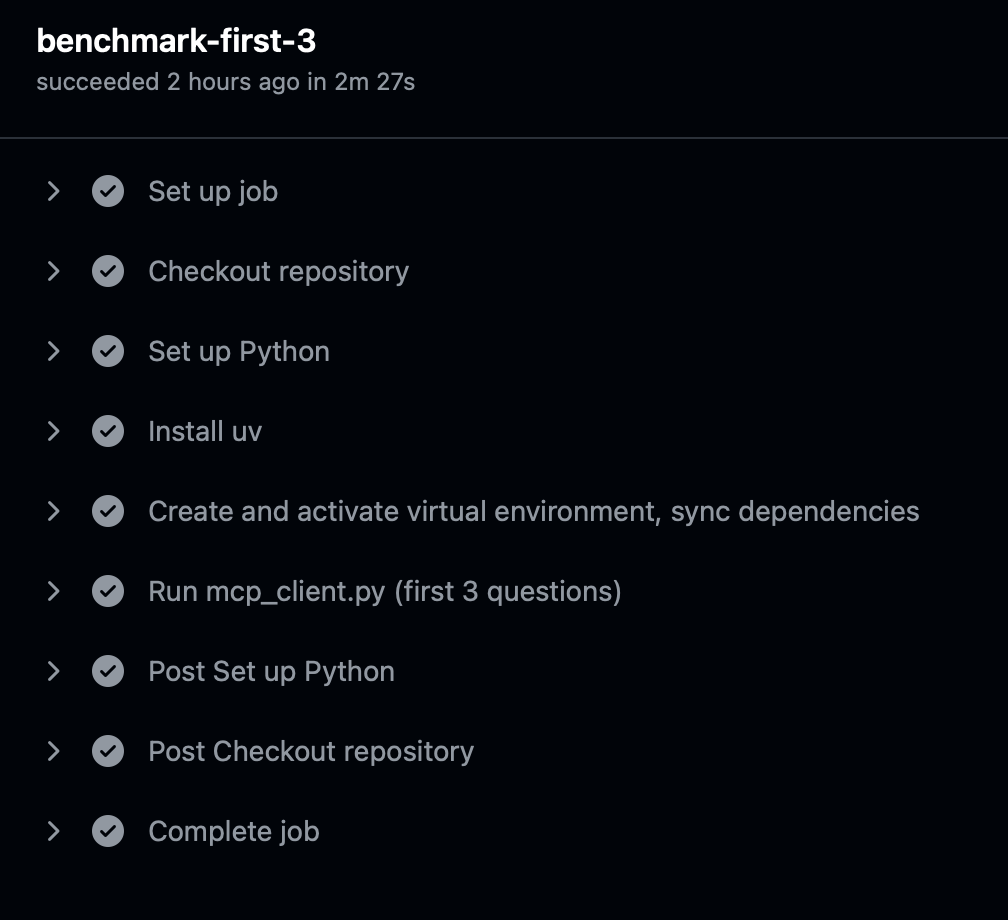

C. Automated & Flexible Evaluation with GitHub Actions

Command-line arguments in mcp_client.py (--first N, --last N, --all) enable targeted testing. These are leveraged by GitHub Actions for automated evaluation:

qa_dict_benchmark-first-3.yml: (Manual Trigger) Runs on the first 3 questions for a quick smoke test of major changes.qa_dict_benchmark-last-15.yml: (Manual Trigger) Tests the typically more complex last 15 questions.qa_dict_benchmark-all.yml: (On Push) Performs a full benchmark on all 50 questions to track overall performance and catch regressions.

Impact: Streamlined the testing pipeline, enabled quicker iteration, ensured consistent evaluation, and helped maintain code quality through automated checks.

Difficulties Encountered (What Didn't Work)

❌ Model & Embedding Experiments

- Temperature and Model Selection: Different models and non-zero temperatures led to inconsistent tool use and hallucinations.

- Embedding Parameters: Tuning chunk size, overlap, and table format did not significantly improve accuracy.

❌ Strategy & Architecture Attempts

- Keyword Augmentation: Adding financial keywords to queries often introduced noise.

- Pure RAG Approach: Failed on calculation-heavy questions requiring tools.

- Complex Prompt Chaining: Increased errors and execution time for no significant gain.

- Dynamic Routing Adjustments: Created inconsistent and unpredictable agent behavior.

Demo 1: FinQA Pipeline Execution

Demo 2: Error Analysis & Debugging

Demo 3: GitHub Actions CI/CD Pipeline

Thank You

Questions?

This presentation was created with reveal.js, an HTML presentation framework.